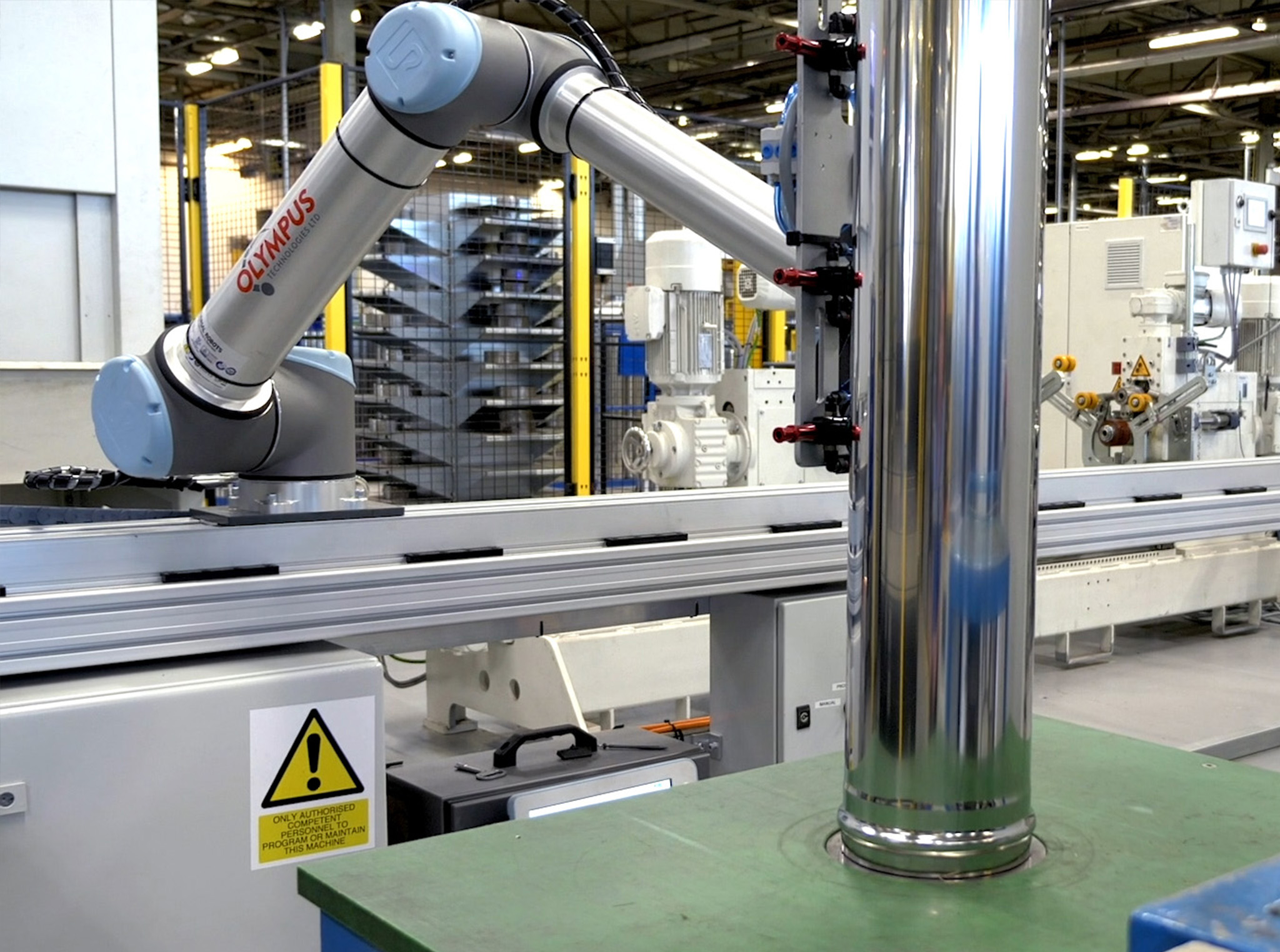

Cobot vision and sensing have become a critical component of modern industrial automation. Collaborative robots are now widely used across manufacturing environments where flexibility, safety, and speed are essential.

Unlike traditional industrial robots, cobots are designed to operate in close proximity to human workers, often sharing the same work environment and supporting multiple tasks across assembly lines.

To function safely and effectively, these robotic systems rely on machine vision and integrated sensing. Together, these technologies support robot guidance, object detection, quality inspection, and safe interaction with people.

When implemented correctly, cobot vision systems increase efficiency, reduce setup time, and help prevent collisions that could injure workers.

Vision Systems in Collaborative Robotics

A vision system allows collaborative robots to interpret their surroundings and respond to real-world variation. Cameras, control systems, and data processing tools work together to identify objects, guide robot arms, and support tasks such as assembly, material handling, and bin picking.

Most cobot applications rely on two main types of machine vision:

- 2D vision systems, which are suitable for flat objects, fixed layouts, and quality inspection where depth information is not required.

- 3D vision systems, which capture depth and spatial data, enabling robots to handle objects with variable orientation or position, such as in bin picking or multi-part assembly.
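To make the 3D case concrete, the sketch below converts a depth camera's pixel coordinates and depth reading into a 3D point in the camera's own coordinate frame using the standard pinhole camera model. It assumes an undistorted image and depth measured along the optical axis. The `Intrinsics` values and the `deproject` helper are hypothetical; real cameras supply their own intrinsics and usually an SDK function for this step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Hypothetical pinhole camera intrinsics, all in pixels."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point (optical centre), x
    cy: float  # principal point (optical centre), y


def deproject(u: float, v: float, depth_mm: float, k: Intrinsics) -> tuple:
    """Back-project pixel (u, v) with a depth reading to (X, Y, Z) in mm, camera frame.

    Assumes lens distortion has already been removed and that depth_mm is
    measured along the camera's optical (Z) axis.
    """
    x = (u - k.cx) * depth_mm / k.fx
    y = (v - k.cy) * depth_mm / k.fy
    return (x, y, depth_mm)


if __name__ == "__main__":
    cam = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # A part detected 60 px right of the image centre, 800 mm from the camera
    print(deproject(380.0, 240.0, 800.0, cam))  # (80.0, 0.0, 800.0)
```

The resulting point is still expressed in the camera's frame; mapping it into robot coordinates is the job of calibration, covered in the next section.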

These systems enable robots to work across multiple workstations and adapt to changing environments without extensive reprogramming. Selecting the right vision system depends on the working distance, object type, and the level of accuracy required.
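Working distance and required accuracy are linked through the camera's field of view. As a rough sketch, the code below estimates the field of view from sensor width, lens focal length, and working distance, then the size of one pixel on the part. The function names, the example sensor and lens values, and the "at least 3 pixels per feature" rule of thumb are illustrative assumptions; lens datasheets and vendor calculators should be used for real selection.

```python
def field_of_view_mm(sensor_width_mm: float, focal_length_mm: float,
                     working_distance_mm: float) -> float:
    """Approximate horizontal field of view at the working distance.

    Uses FOV ~ sensor_width * working_distance / focal_length, which is a
    reasonable approximation when the working distance is much larger than
    the focal length.
    """
    return sensor_width_mm * working_distance_mm / focal_length_mm


def mm_per_pixel(fov_mm: float, pixels_across: int) -> float:
    """Size of one pixel projected onto the object plane."""
    return fov_mm / pixels_across


def smallest_feature_mm(fov_mm: float, pixels_across: int,
                        min_pixels_per_feature: int = 3) -> float:
    """Smallest feature spanning min_pixels_per_feature pixels (assumed rule of thumb)."""
    return mm_per_pixel(fov_mm, pixels_across) * min_pixels_per_feature


if __name__ == "__main__":
    fov = field_of_view_mm(sensor_width_mm=8.0, focal_length_mm=16.0,
                           working_distance_mm=500.0)
    print(fov)                             # 250.0
    print(mm_per_pixel(fov, 2000))         # 0.125
    print(smallest_feature_mm(fov, 2000))  # 0.375
```

Under this approximation, doubling the working distance doubles the field of view and halves the detail per pixel, which is why working distance and required accuracy should be fixed before a camera and lens are chosen.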

Calibration as a Foundation for Robot Guidance

Calibration is essential for reliable robot guidance. It establishes the relationship between the camera's coordinate frame and the robot's coordinate frame, so that a position detected in the image can be converted into a physical position the robot arm can reach.

Without accurate calibration, even advanced vision technology will struggle to deliver consistent results. Errors often appear as misaligned picks, reduced quality, or longer setup time.
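The result of a camera-to-robot (hand-eye) calibration is typically a rigid transform, often written as a 4 × 4 homogeneous matrix, that converts points from the camera frame into the robot base frame. The sketch below applies such a matrix to a detected point. The matrix is a hypothetical example (a fixed camera looking straight down at the work area); a real one comes from a calibration routine, such as OpenCV's hand-eye calibration functions or the robot or camera vendor's own tooling.

```python
def transform_point(T_base_cam: list, p_cam: tuple) -> tuple:
    """Map a 3D point from the camera frame into the robot base frame.

    T_base_cam is a 4x4 homogeneous matrix [[R | t], [0 0 0 1]] stored as a
    list of rows; only the top three rows are needed to transform a point.
    """
    x, y, z = p_cam
    return tuple(
        T_base_cam[i][0] * x + T_base_cam[i][1] * y + T_base_cam[i][2] * z + T_base_cam[i][3]
        for i in range(3)
    )


# Hypothetical calibration result: camera 400 mm along the base X axis and
# 500 mm above the base, rotated 180 degrees about X so it looks straight down.
T_BASE_CAM = [
    [1.0, 0.0, 0.0, 400.0],
    [0.0, -1.0, 0.0, 0.0],
    [0.0, 0.0, -1.0, 500.0],
    [0.0, 0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    # Part detected at (80, 20, 450) mm in camera coordinates
    print(transform_point(T_BASE_CAM, (80.0, 20.0, 450.0)))  # (480.0, -20.0, 50.0)
```

Any error in this matrix is carried into every pick position, which is why misaligned picks are often the first sign of a calibration problem.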

Key calibration activities include:

- Aligning the camera with the robot arm for accurate object detection

- Defining the tool centre point (TCP) so the robot positions the working point of the tool, not just its mounting flange

- Verifying calibration after changes to tools, lighting, or working distance

For engineers responsible for robotic systems, calibration should be treated as a routine task rather than a one-off activity during commissioning.
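Routine verification can be as simple as measuring a few reference points and comparing where the vision system says they are with where the robot finds them, for example by jogging the TCP to touch each point. The sketch below assumes both lists are already in the robot base frame and in millimetres; the function name and the 0.25 mm tolerance are hypothetical and should be set from the application's accuracy requirement.

```python
import math


def check_calibration(predicted_mm, measured_mm, tolerance_mm=0.25):
    """Compare vision-predicted and robot-measured reference points.

    predicted_mm, measured_mm: equal-length lists of (x, y, z) points in the
    robot base frame. Returns (needs_recalibration, worst_error_mm).
    """
    if len(predicted_mm) != len(measured_mm) or not predicted_mm:
        raise ValueError("need the same, non-zero number of predicted and measured points")
    # Euclidean distance between each predicted point and its measured counterpart
    errors = [math.dist(p, m) for p, m in zip(predicted_mm, measured_mm)]
    worst = max(errors)
    return worst > tolerance_mm, worst


if __name__ == "__main__":
    predicted = [(100.0, 0.0, 0.0), (200.0, 50.0, 0.0)]
    measured = [(100.0, 0.5, 0.0), (200.0, 50.0, 0.0)]
    print(check_calibration(predicted, measured))  # (True, 0.5)
```

Running a check like this after any tool, lighting, or working-distance change turns the checklist above into a repeatable pass/fail step.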

Lighting and Image Quality in Manufacturing Environments

Lighting plays a decisive role in machine vision performance. Poor or inconsistent light conditions directly affect the system’s ability to detect features, edges, and surface details.

Common lighting approaches include diffuse lighting, structured lighting, and bright field illumination. Bright field lighting is often used for quality inspection tasks where surface detail and contrast are critical.

LED lights are widely used in industrial environments due to their stability, long lifespan, and ease of control. However, lighting must be designed around the work environment, material reflectivity, and camera placement. Changes in ambient light, reflective objects, or shadows can compromise image quality if not properly managed.
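One practical safeguard is to check image statistics in a fixed reference region against values recorded when the lighting was commissioned. The sketch below uses mean brightness and Michelson contrast, (max - min) / (max + min), on an 8-bit grayscale patch. The function names, the patch format (a list of pixel rows), and the tolerances are illustrative assumptions rather than recommended values.

```python
def region_stats(pixels):
    """Return (mean brightness, Michelson contrast) for a grayscale patch.

    pixels: list of rows of 8-bit intensities (0-255).
    """
    flat = [p for row in pixels for p in row]
    lo, hi = min(flat), max(flat)
    mean_brightness = sum(flat) / len(flat)
    contrast = (hi - lo) / (hi + lo) if (hi + lo) > 0 else 0.0
    return mean_brightness, contrast


def lighting_ok(pixels, ref_mean, ref_contrast, mean_tol=20.0, contrast_tol=0.15):
    """True if brightness and contrast are still close to the commissioned reference."""
    mean_brightness, contrast = region_stats(pixels)
    return (abs(mean_brightness - ref_mean) <= mean_tol
            and abs(contrast - ref_contrast) <= contrast_tol)


if __name__ == "__main__":
    reference_patch = [[40, 200], [40, 200]]
    ref_mean, ref_contrast = region_stats(reference_patch)  # 120.0 and about 0.667
    dimmed_patch = [[20, 100], [20, 100]]                    # simulated drop in illumination
    print(lighting_ok(reference_patch, ref_mean, ref_contrast))  # True
    print(lighting_ok(dimmed_patch, ref_mean, ref_contrast))     # False
```

The dimmed patch keeps the same contrast ratio but fails on brightness, which is why the sketch checks both.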

Effective lighting supports reliable object detection and helps maintain a safe working environment for humans and robots operating together.

Accuracy, Repeatability, and Control

Performance in cobot vision and sensing systems is defined by several key factors. Accuracy describes how close the robot gets to a commanded target position. Repeatability measures whether it can return to the same position consistently. Resolution defines the smallest detail the camera or sensor can detect.

These factors influence the system’s ability to perform specific tasks such as assembly, inspection, or material handling. High accuracy without repeatability leads to inconsistent results. High resolution without proper control systems increases processing time without improving outcomes.
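Accuracy and repeatability can be quantified from repeated moves to the same commanded position. The sketch below follows the approach used in ISO 9283 for industrial robot performance testing: accuracy is the distance from the commanded position to the barycentre (mean) of the attained positions, and repeatability is the mean distance of the attained positions from that barycentre plus three sample standard deviations. The function name and example data are hypothetical, and the attained positions are assumed to come from an external measuring system, in millimetres.

```python
import math
from statistics import mean, stdev


def accuracy_and_repeatability(commanded, attained):
    """Return (accuracy_mm, repeatability_mm) for repeated moves to one position.

    commanded: (x, y, z) target; attained: list of measured (x, y, z) positions.
    At least two attained positions are needed for the sample standard deviation.
    """
    if len(attained) < 2:
        raise ValueError("need at least two attained positions")
    barycentre = tuple(mean(p[i] for p in attained) for i in range(3))
    accuracy = math.dist(commanded, barycentre)            # systematic offset
    distances = [math.dist(p, barycentre) for p in attained]
    repeatability = mean(distances) + 3 * stdev(distances)  # spread around the mean
    return accuracy, repeatability


if __name__ == "__main__":
    # Hypothetical: robot lands in the same place every time, but 0.5 mm off in X
    print(accuracy_and_repeatability((0.0, 0.0, 0.0), [(0.5, 0.0, 0.0), (0.5, 0.0, 0.0)]))  # (0.5, 0.0)
```

The example is perfectly repeatable but consistently 0.5 mm off target. A constant offset like this can usually be compensated, for example by correcting calibration or the TCP, whereas poor repeatability cannot be corrected that way.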

Balanced system design ensures robots can operate at the required speed while maintaining quality and efficiency.

Beyond Vision: Integrated Sensing for Safety and Control

Vision alone is not sufficient to address all safety concerns in collaborative robotics. Additional sensors play a vital role in separation monitoring, collision prevention, and controlled interaction with human operators.
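Separation monitoring is commonly implemented as speed and separation monitoring, in which the robot must slow or stop before a person comes closer than a protective separation distance. The sketch below computes a simplified, constant-speed form of that distance as described in ISO/TS 15066: S_p = v_h(T_r + T_s) + v_r·T_r + S_s + C + Z_d + Z_r. The parameter names and example values are hypothetical; in a real cell they come from the risk assessment, the robot's stopping data, and the sensor specifications, and the check runs on safety-rated hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeparationParams:
    human_speed: float         # v_h, approach speed of the person (mm/s)
    robot_speed: float         # v_r, robot speed towards the person (mm/s)
    reaction_time: float       # T_r, sensing and controller reaction time (s)
    stopping_time: float       # T_s, time for the robot to stop (s)
    stopping_distance: float   # S_s, distance the robot travels while stopping (mm)
    intrusion_distance: float  # C, reach into the sensing zone before detection (mm)
    human_uncertainty: float   # Z_d, position uncertainty of the person (mm)
    robot_uncertainty: float   # Z_r, position uncertainty of the robot (mm)


def protective_separation_mm(p: SeparationParams) -> float:
    """Simplified constant-speed protective separation distance (mm)."""
    s_h = p.human_speed * (p.reaction_time + p.stopping_time)  # person keeps moving until the robot stops
    s_r = p.robot_speed * p.reaction_time                      # robot moves before it starts braking
    return (s_h + s_r + p.stopping_distance + p.intrusion_distance
            + p.human_uncertainty + p.robot_uncertainty)


def must_stop(measured_separation_mm: float, p: SeparationParams) -> bool:
    """True if the person is at or inside the protective separation distance."""
    return measured_separation_mm <= protective_separation_mm(p)


if __name__ == "__main__":
    params = SeparationParams(1600.0, 400.0, 0.125, 0.25, 100.0, 50.0, 50.0, 10.0)
    print(protective_separation_mm(params))  # 860.0
    print(must_stop(800.0, params))          # True
```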

Force sensors, proximity sensors, and tactile sensors allow robotic systems to respond to physical contact, resistance, or nearby movement. These tools help prevent collisions and reduce the risk of injury.
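For force sensing, one common pattern is to compare the measured force with the force expected from the robot's own motion and payload, and to trigger a protective stop when the difference stays above a limit. The sketch below illustrates that idea with a short persistence check so a single noisy sample does not stop the cell. The function name, the 3-sample window, and the limit value are assumptions; real force limits should come from the application's risk assessment.

```python
import math


def contact_detected(measured_n, expected_n, limit_n, min_samples=3):
    """Detect unexpected contact from force samples.

    measured_n, expected_n: sequences of (Fx, Fy, Fz) in newtons, sample by sample.
    Returns True once the residual force exceeds limit_n for min_samples
    consecutive samples.
    """
    consecutive = 0
    for measured, expected in zip(measured_n, expected_n):
        residual = math.dist(measured, expected)  # magnitude of the unexplained force
        consecutive = consecutive + 1 if residual > limit_n else 0
        if consecutive >= min_samples:
            return True
    return False


if __name__ == "__main__":
    expected = [(0.0, 0.0, 10.0)] * 5  # e.g. payload weight only
    spike = [(0.0, 0.0, 10.0), (0.0, 0.0, 40.0)] + [(0.0, 0.0, 10.0)] * 3
    push = [(0.0, 0.0, 10.0)] * 2 + [(0.0, 0.0, 40.0)] * 3
    print(contact_detected(spike, expected, limit_n=20.0))  # False
    print(contact_detected(push, expected, limit_n=20.0))   # True
```

The persistence window trades nuisance stops against reaction time: at an assumed 1 kHz sample rate, a 3-sample window spans about 3 ms.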

Integrated sensing supports the safety measures required in shared workspaces and enables people to work confidently alongside robots. This is particularly important where cobots, other automated equipment, and automated guided vehicles (AGVs) operate within the same area.

Real-World Challenges in Industrial Automation

While vision-guided cobots offer clear benefits, real production environments introduce challenges that must be addressed.

Common issues include:

- Variations in objects and components

- Changes in lighting and background conditions

- Integration with existing industrial robots and control systems

- Software limitations when adapting to new tasks or environments

- Environmental factors such as dust, vibration, or temperature changes

These challenges can make vision systems appear unreliable if they are not properly designed, tested, and monitored. A system that performs well in a controlled demonstration may struggle when deployed across a live assembly line.

Optimising Vision and Sensing for Production Use

Successful deployment of cobot vision and sensing solutions requires a structured approach:

- Select vision and sensing tools based on the application's requirements, not on the technology alone

- Design lighting and camera placement around the actual work environment

- Include calibration as part of ongoing maintenance

- Integrate vision with force and proximity sensing where safety or precision is critical

- Use local processing where speed and response time matter

- Monitor system data to identify drift, noise, or performance loss
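For the monitoring step, one lightweight approach is to track an exponentially weighted moving average (EWMA) of a per-cycle error signal, such as the distance between where the vision system placed a part and where it was verified to be, and raise an alert when the average drifts past a limit. The class name, smoothing factor, and limit below are hypothetical.

```python
class DriftMonitor:
    """EWMA-based drift alarm for a per-cycle error signal (e.g. pick offset in mm)."""

    def __init__(self, alpha: float = 0.1, limit: float = 0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # weight of the newest sample; smaller = smoother but slower
        self.limit = limit  # alarm threshold, in the same units as the error
        self.ewma = None    # no history until the first sample arrives

    def update(self, error: float) -> bool:
        """Add one cycle's error and return True if the smoothed value exceeds the limit."""
        if self.ewma is None:
            self.ewma = error
        else:
            self.ewma = self.alpha * error + (1.0 - self.alpha) * self.ewma
        return self.ewma > self.limit


if __name__ == "__main__":
    monitor = DriftMonitor(alpha=0.5, limit=0.5)
    for err in [0.2, 1.0]:
        print(monitor.update(err))  # False, then True
```

Logging the smoothed value alongside the raw errors also helps separate slow drift (a rising average) from noise (large scatter around a stable average).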

Systems that are easy to integrate and user-friendly reduce downtime and allow engineers to focus on productivity rather than troubleshooting.

The Role of AI and Machine Learning

Artificial intelligence and machine learning are increasingly used to improve object detection, classification, and adaptability in cobot vision systems. Trained models allow robots to handle variation more effectively and reduce the time spent manually writing and tuning detection rules.

Edge-based AI enables faster decision-making by processing data locally rather than relying on external infrastructure. This approach improves speed, reliability, and control, particularly in environments where connectivity is limited or latency is unacceptable.
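The latency argument for edge processing can be checked with a simple budget: add up the worst-case time of each stage between image capture and robot command, and compare the total with the time the cycle allows. The stage names and millisecond figures below are hypothetical placeholders, not measurements; real values should come from profiling the actual cameras, network, and controller.

```python
def check_latency_budget(stages_ms: dict, budget_ms: float):
    """Return (fits, total_ms, slack_ms) for a pipeline of worst-case stage latencies."""
    total = sum(stages_ms.values())
    return total <= budget_ms, total, budget_ms - total


if __name__ == "__main__":
    # Hypothetical worst-case figures for one vision-guided pick
    edge = {"capture": 15, "inference_on_device": 20, "send_to_controller": 5}
    remote = {"capture": 15, "upload": 30, "inference_remote": 10,
              "download": 30, "send_to_controller": 5}
    for name, stages in [("edge", edge), ("remote", remote)]:
        print(name, check_latency_budget(stages, budget_ms=50))
    # edge (True, 40, 10)
    # remote (False, 90, -40)
```

Worst-case rather than average figures matter here, because network round trips tend to vary far more than local inference does.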

While AI is not a replacement for good system design, it offers valuable benefits when combined with robust vision and sensing fundamentals.

Conclusion

Cobot vision and sensing are fundamental to safe, efficient, and reliable collaborative robotics. When vision systems, sensors, and control systems are properly integrated, they allow robots to perform critical tasks with confidence while supporting human operators.

At Olympus Technologies, we focus on delivering practical, engineered solutions that work in real manufacturing environments. From robot guidance and quality inspection to material handling and assembly, our approach prioritises safety, performance, and long-term reliability.

If you are assessing vision-guided cobots or reviewing an existing system, our engineers can provide guidance tailored to your specific application and operational requirements.